Solving the Traveling Salesman Problem with Reinforcement Learning

Reinforcement Learning (RL) is usually applied for state of the art AI research and often make the headlines. Yet it still fails to deliver on concrete business topics. At Ekimetrics we strive to transfer AI innovations into the business world and Reinforcement Learning is a unbelievable playground to find disruptive solutions to complex real-world problems. In particular, there are many optimization problems that could be solved using RL.

The Traveling Salesman Problem (TSP) has been solved for many years and used for tons of real-life situations including optimizing deliveries or network routing . This article will show a simple framework to apply Q-Learning to solving the TSP , and discuss the pros & cons with other optimization techniques. It's a perfect introduction for beginners in Reinforcement Learning and does not require heavy computational capabilities.

You can find all the code open sourced here on Github

The Traveling Salesman Problem

The Traveling Salesman Problem (or TSP) is a typical optimization problem, where one has to find the shortest route to visit different cities. There are many different ways to solve this problem using discrete optimization techniques.

Like many optimization problems, it's a NP-hard problem, which in short means that it's easy (in terms of speed) to solve for 5 cities, already impossible to brute force for 50. And almost impossible for most algorithms for 5,000 cities. Even for 15 cities, it's already 1 trillion permutations to compute (15!), there are optimization techniques that are more adequate : dynamic programming, branch and bound algorithms, nearest neighbors approximations, or ant colonies optimizations.

Furthermore, the problem described here is too simple to describe a real-life delivery situation. It does not take into account multiple vehicle fleet, electric vehicle charging, time window constraints, capacity constraints, aleatory perturbations, etc... Hence, each variant of the TSP has its own optimization frameworks (in terms of variables and constriants), and the more you complexify the problem, the more difficult it is of course. That's why in practice delivery companies use combinations of those variants coupled with heuristics. The most advanced companies today add Machine Learning techniques on top of those algorithms, in particular to replace manual heuristics and better estimate in-context durations.

In Python, the easiest way to get started with TSP and its variants is probably the great open source library OR-Tools by Google . And if you want to learn more about discrete optimization, I can only recommend the great MOOC on Discrete Optimization by the University of Melbourne you can find on Coursera .

Applying Reinforcement Learning to the TSP

Why bother using reinforcement learning .

If it's already solved by classical optimization techniques, why bother using Reinforcement Learning? Well several answers:

- It's fun and I personally love practicing RL. Curiosity is a great driver for innovation, and here I was really wondering if it could be applied for such a problem.

- RL is rarely used in real-life problems. Playing games or manipulating robot hands is awesome, but when it comes to business problems, RL often fails compared to simple heuristics or algorithms. At Ekimetrics, we always look for applying state-of-the-art AI research to the business problems we encounter in the real-world.

However, Reinforcement Learning (in theory) would hold many advantages compared to classical optimization techniques :

- Offering a general framework for all problems , indeed instead of tweaking the constraints and defining extra variables, you can change the reward, and defining a multi agent problem if needed for fleet optimization. Adding extra information like delivery time estimation is also eased if you can integrate the prediction algorithm with a similar ML techniques (e.g. Neural Networks)

- Having a "live" decision making algorithm . Because you would train a RL alorithm by making the next delivery decision at each stop, compared to "offline" optimization algorithms that study the problem with no unknowns, you inherently would be able to take different decisions if something happened during the experience, whereas you would need to recalculate with classical techniques

- Being robust to unknowns and aleatory perturbations

Transforming the TSP to a RL problem

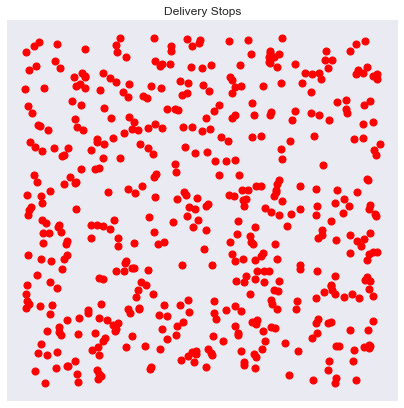

Before jumping into the TSP variants, let's take the most simplest version: a delivery man who has to deliver 50 packages . Between each stop can be defined a distance or a duration (or a more complex metric).

To transform it to a RL problem, we need to define:

- Agent : the delivery man

- Environment : the different packages to deliver (and their destination) and the city where to navigate

- States : the location where the delivery guy currently stops

- Actions : at each location, the decisions to make (modeled as a Markov process) are: "where to go next" (or "which location do I chose next")

- Reward : Between two locations (states), how long (or how far) it is

Creating the routing environment

Creating a simple version of the environment is quite simple in pure Python. Indeed, you can store the position of the stops in a 2D virtual world as a numpy array or a Pandas DataFrame. Distances between stops can be calculated with an euclidean distance, but it could be complexified to account for durations or represent any distance/duration metric you would see in a routing network.

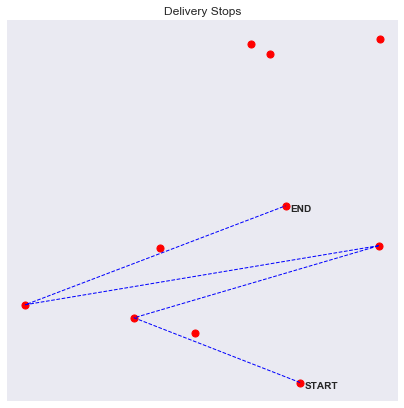

Then we want to define the starting position, the route, and the end position. Meaning that if we have to traverse A, B, C, D, E, possible routes would be for example A->C->B->E->D or B->D->E->C->A .

By the way with 5 stops you have 5! possible routes or 120 possible routes. For 10 stops you already have 3.6 million possible routes. For 100 stops it's 10 to the 158th power routes. It's called the combinatory explosion, and that explains why this simple problem is impossible to brute force for a high number of stops.

We can visualize routes by drawing lines between each stop

We can also already imagine more complex and realistic situations. For example, one experiment I wanted to do was to stress test the RL algorithm to aleatory events. For example a known environment with unknown perturbations, like traffic. To model this interaction, we add a "traffic zone" inside our environment, where routes are much slower if taken. To account for the extra duration, we calculate the intersection points of the route within the "traffic zone" and measure the distance of the formed segment, we then use that distance weighed with a traffic intensity factor.

Designing the Q-Learning algorithm

Before jumping into complex Deep Learning, I wondered if a simple Q-Learning framework would work . Basically with Q-Learning you want to evaluate a matrix mapping states, actions and rewards. Indeed to make a decision in a given state about the best actions to do, you would love to have an estimate if the decision was the best in the long term. This is represented by the Q values.

In our case, the rows are the different states (all the stops) and the columns the possible actions to take in this state, hence the next stop to go. The values are the estimated long-term reward you would get by taking this action. So, if you are found in a state A, you would like to take the action with the maximum Q value.

For our TSP problem for example, we would have a Q-Matrix of 50 by 50 if we have 50 stops.

Because we want to inform the routing algorithm with as much unbiased data we can find, we can actually initalize the Q matrix (also called Value function because it maps out states and actions to rewards - the values) with the distance matrix between all stops. Indeed, if you would not consider a long-term decision making strategy, you would apply a greedy one where you would chose the closest stop as a next destination. Yet it would definetely be better than random.

Ok, but how to update those values and incorporate long-term planning? This is exactly the goal of the Q-Learning algorithm. Imagine you are delivering pizzas every day all across the city, you have an old GPS to help you decide the shortest route between your stops. But your city is much more complicated than what you and your GPS know. What would happen?

- The first day, you would chose the closest stop first and then jump to the next closest one. You will quickly realize that it's not optimized. You should maybe have gone first to another neighborhood and deliver everything in the area before jumping to another one.

- So the next day, you commit to another strategy, armed with yesterday's experience. Definitely, looking ahead and avoiding single deliveries in remote areas even if they are closest is a better idea. But while you are exploring the best routes, you pass through the city center and get blocked into traffic that terribly slows you down.

- The 3rd day, you will try to apply the same general strategy, but definitely if possible you will never go through the city center, and try circling around the city to save some precious time.

This trial-and-error behavior is basically how experience replay works in the Reinforcement Learning framework .

If we code an agent abstraction, it could look like this:

The most important line is this one:

This is the update rule in basic Q-Learning, where you increment the Q-value for each action in each experience replay (simulating a day of delivery hundreds of time) by the current reward + the reward for the best possible action you would take in the future. This recursive equation is a variation of the famous Bellman equation for Q value functions.

Notice two factors:

- The learning rate lr control the learning speed (like in Deep Learning)

- The gamma factor gamma is the discount factor, and control long-term planning. Indeed if gamma = 0, you will only get the next action reward (your agent is "short sighted" only seeking current rewards), if gamma = 1 you will be more oriented towards the future rewards (above 1 it may diverge)

Those two factors are our most important hyperparameters to tune during training (there are others like the epsilon variables defined in the initialization, if you are curious take a look at epsilon-greedy methods, a super simple way of tackling the exploration-exploitation dilemma in Reinforcement Learning)

Writing the training loop

Once we have define the environment, the Q-Agent and the update rules for the value function, we only need the final step: the training loop. We will apply experience replay to our poor delivery guy stucked in an infinite time loop.

Every day, over and over, he will try to deliver our 50 packages, find the best routes and do it all over the next day. In Reinforcement Learning we call each day an episode , where we simply:

- Reset the environment

- Make a decision of the next state to go to

- Remember the reward gained by this decision (minimum duration or distance elapsed)

- Train our agent with this knowledge

- Make the next decision until all stops are traversed

Q-Learning for a simple TSP

When you start training and experiencing the same problem overtime, your agent learns about the environment, this is shown by the episode rewards values for each experience replay.

- In the first phase, until the 400th episode, you are still exploring the different routes. Indeed with the epsilon decay method (with epsilon_decay=0.999 ), you are taking random actions at each step.

- In the second phase, epsilon is lowering, and you start exploiting what you have learnt, and take less and less random actions to be more driven by Q values.

What's tricky with epsilon-greedy methods, is that it kind of forces of the convergence. So did it work? Let's see.

50 stops experiment

100 stops experiment , 500 stops experiment .

In each experiment, the algorithm converges quite fast to a seamingly acceptable route. After exploring a lot of options it not only gives one route but variations of the accepted strategy, which can already be interesting to find alternatives.

Q-Learning for a TSP with traffic zones

Now that we have our environment, agent and framework defined, what's great with RL is that we don't have to change anything but the reward to model a different situation. Indeed because we tweaked the reward when you drove through a traffic zone, the agent will learn the same way to optimize his holistic route.

100 stops experiment with traffic zones

Eureka, the agent will avoid as much as possible the traffic zones

500 stops experiment with traffic zones

With more points, it's even more interesting, the agent will really circle around the traffic zone and prefer longer but faster routes.

Next steps

I hope this simple experiment has highlighted how to apply (non-Deep Learning) Reinforcement Learning techniques to real-life problems. I haven't had time to benchmark the resolution against other optimization techniques (which I should have done I confess), but let's try to draw some pros and cons for the approach.

- Probably slower

- Definitely less accurate than a discrete optimization technique

- General framework to be updated in real-life situations (eg: the traffic) and extended to more complex problems

- Alternative routes are proposed

- "Online" decision making (meaning that you have an algorithm armed with a next-best decision recommendation system)

Next steps is to extend the work to an even more global framework to account for multiple vehicle fleets, charging stations and more. The latter idea will require to use Deep Reinforcement Learning because states could not be represented as a matrix, and will probably be more difficult (impossible?) to train, but that's a topic for a next article!

References

- All the code is open sourced here on Github

- OR-Tools open source library by Google

- MOOC on discrete optimization by the University of Melbourne on Coursera

- The timeless MOOC on Reinforcement Learning by David Silver at Deepmind

- The Traveling Salesman Problem

- Why bother using Reinforcement Learning?

- Transforming the TSP to a RL problem

- Creating the routing environment

- Designing the Q-Learning algorithm

- Writing the training loop

- Q-Learning for a simple TSP

- Q-Learning for a TSP with traffic zones

Scientists Solve Most Complex Traveling Salesman Problem Ever

See how they cracked the deceptively simple—but brutally tough—problem for 22 "cities."

- The traveling salesman is an age-old exercise in optimization , studied in school and relevant to "real life."

- Rearranging how data feeds through the processor allows more than one thread to compute at a time.

Scientists in Japan have solved a more complex traveling salesman problem than ever before. The previous standard for instant solving was 16 “cities,” and these scientists have used a new kind of processor to solve 22 cities. They say it would have taken a traditional von Neumann CPU 1,200 years to do the same task.

The traveling salesman problem is centuries old, and it asks a deceptively simple question: For a salesman with a map of, say, 10 cities with given distances apart and roads connecting them, what’s the shortest path for the salesman to travel to every city and return home? The “deceptive” part is that the math to support the problem quickly grows overwhelmingly complex.

Each leg of the journey has a different length. Not all the cities connect to each other to form an ideal full star topology. And segments that connect, say, five cities have to somehow be compared to segments that connect three or eight. It’s just too variable, in both the proverbial and literal senses. A computer processor must calculate one full route at a time while storing the order of the points touched and the length of each leg of the journey.

The problem combines graph theory (the “map” of points with weighted legs between them) with combinatorics (the different ways you can count through a graph, in this case) and optimization (choosing the best, shortest route from a given graph). Because of this robust subject appeal, it’s been a favorite exercise in math and computer science classes of all kinds for many, many years.

The secret to these researchers’ success with the traveling salesman problem is in the special circuit they designed to calculate the routes. In a traditional computer processor, the routes must be arranged and then calculated and all passed through the processor one point at a time—the system is linear.

In most computing applications, we don’t notice that processing happens this way because the calculations are so rapid that they basically appear simultaneous. But the traveling salesman problem clogs the works because the number of calculations required is so huge. Adding more points on the map only increases the complexity. (Honestly, this news itself underlines how complicated the problem is: It’s major news to be able to solve just 22 cities instead of just 16!)

The researchers took a traditional circuit architecture and changed one critical thing: They rearranged special “spin cells” representing all the graph points so that the spin cells could all communicate with each other, not just the immediate surroundings connected by lines on the graph. This new arrangement allows routes to be made using multiple points at a time instead of just one, with fewer bottlenecks in the computational process.

The potential applications of a more powerful salesman solver are myriad. The abstract problem is infamous because it’s so widely studied and difficult, but its roots are still as an abstraction of a real person’s dilemma: How do I do my job the most efficient way? Every day, taxi and Uber drivers must consider the best route to find the most passengers. Delivery drivers must arrange their addresses in an efficient way. And these applications don’t just involve minimizing distance—fresh food or the value of a fare add even more complexity.

Suddenly, the 22nd address on the route is going to receive a much hotter pizza.

Caroline Delbert is a writer, avid reader, and contributing editor at Pop Mech. She's also an enthusiast of just about everything. Her favorite topics include nuclear energy, cosmology, math of everyday things, and the philosophy of it all.

.css-cuqpxl:before{padding-right:0.3125rem;content:'//';display:inline;} Science .css-xtujxj:before{padding-left:0.3125rem;content:'//';display:inline;}

This Patient Had a COVID Infection for Two Years

Scientists Are Building a Noah’s Ark for Corals

Gravity Might Reverse—or Undo—the Big Bang

Bottled Cherries Found in George Washington Cellar

Covering Fields in Concrete Could be a Good Thing

A New Study Unveils ‘Twisters’ Inside Egg Cells

A New Theory Says Dark Matter Shaped the Universe

Alexander the Great Portrait Has Dazzled Experts

This Star Explodes Every 80 Years. And Survives.

Does This Evidence Proves Life is a Simulation?

What To Do When Your Pet Octopus Has 50 Hatchlings

Key Products

- Route Optimization API

Optimize routing, task allocation and dispatch

- Directions and Distance Matrix API

Calculate accurate ETAs, distances and directions

- Navigation API & SDKs

Provide turn-by-turn navigation instructions

- Live Tracking API & SDKs

Track and manage assets in real time

- All Products

Platform Overview

Learn about how Nextbillion.ai's platform is designed

Product Demos

See NextBillion.ai APIs & SDKs In action

- Integrations

Easily integrate our products with your tools

Is NextBillion.ai right for you?

Understand if NextBillion.ai is right for your business

NextBillion.ai vs. Google Cloud Fleet Routing

NextBillion.ai vs. Google Distance Matrix API

NextBillion.ai vs. HERE Technologies

Technical Documentation

Snap-to-Road API

Snap GPS tracks to underlying road networks

Isochrone API

Define serviceable areas based on travel times

Optimization

Clustering API

Group data points based on their characteristics

Geofencing API

Mark virtual boundaries & track asset movements

Live Tracking SDK

Real-Time asset tracking on Mobile

Maptiles API

Create immersive visual experience for your customers

Maptiles SDK

Tailor-Made Maps for Mobile Apps

Supply Chain and Logistics

- On-Demand Delivery

- Fleet Management

E-commerce Logistics

- Field Services

Pest Control

Cleaning Services

HVAC Services

Laundry and Dry Cleaning

Ride-Hailing

Urban Mobility

Non Emergency Medical Transport

Employee Transportation

Final Mile Logistics

Public Services

Emergency Services

Waste Management

Supply Chain & Logistics

Get regulation-compliant truck routes

Solve fleet tracking, routing and navigation

- Last-Mile Delivery

Maximize fleet utilization with optimal routes

Real-time ETA calculation

- Middle Mile Delivery

Real-time ETA Calculation

- Ride Hailing

Optimized routes for cab services

- Non-Emergency Medical Transport

Optimize routing and dispatch

Field Workforce

Automate field service scheduling

- Waste Collection

Efficient route planning with road restrictions

Navigate the spatial world with engaging and informative content

- Case Studies

Discover what customers are building in real time with NextBillion.ai

- Product Updates

Latest product releases and enhancements

Join us on our upcoming virtual webinars

Whitepapers

Read through expert insights and opinions from the mapping community

Get in-depth and detailed insights

See NextBillion.ai APIs & SDKs in Action

Experience a more powerful optimization and scheduling platform, better optimized routes, advanced integration capabilities and flexible pricing with NextBillion.ai.

- NextBillion.ai vs Google Cloud Fleet Routing

- NextBillion.ai vs Google Distance Matrix API

- NextBillion.ai vs HERE Technologies

FEATURED BLOG

- API Documentation

Comprehensive API guides and references

Interactive API examples

Integrate tools you use to run your business

- Technical Blogs

Deep-dive into the technical details

Get quick answers to common queries

FEATURED TECHNICAL BLOG

How to Implement Route Optimization API using Python

Learn how to implement route optimization for vehicle fleet management using python in this comprehensive tutorial.

NEXTBILLION.AI

Partner with us

Our story, vision and mission

Meet our tribe

Latest scoop on product updates, partnerships and more

Come join us - see open positions

Reach out to us for any product- or media-related queries

For support queries, write to us at

To partner with us, contact us at

For all media-related queries, reach out to us at

For all career-related queries, reach out to us at

- Request a Demo

Best Algorithms for the Traveling Salesman Problem

- March 15, 2024

What is a Traveling Salesman Problem?

In the field of combinatorial optimization, the Traveling Salesman Problem (TSP) is a well-known puzzle with applications ranging from manufacturing and circuit design to logistics and transportation. The goal of cost-effectiveness and efficiency has made it necessary for businesses and industries to identify the best TSP solutions. It’s not just an academic issue, either. Using route optimization algorithms to find more profitable routes for delivery companies also lowers greenhouse gas emissions since fewer miles are traveled.

In this technical blog, we’ll examine some top algorithms for solving the Traveling Salesman Problem and describe their advantages, disadvantages, and practical uses.

Explore NextBillion.ai’s Route Optimization API , which uses advanced algorithms to solve TSP in real-world scenarios.

Brute Force Algorithm

The simplest method for solving the TSP is the brute force algorithm. It includes looking at every way the cities could be scheduled and figuring out how far away each approach is overall. Since it ensures that the best solution will be found, it is not useful for large-scale situations due to its time complexity, which increases equally with the number of cities.

This is a detailed explanation of how the TSP is solved by the brute force algorithm:

Create Every Permutation: Make every combination of the cities that is possible. There are a total of n! permutations to think about for n cities. Every combination shows a possible sequence where the salesman could visit the cities.

Determine the Total Distance for Every Combination: Add up the distances between each city in the permutation to find the overall distance traveled for each one. To finish the trip, consider the time from the final city to the starting point.

Determine the Best Option: Observe which permutation produces the smallest total distance and record it. This permutation represents the ideal tour. Return the most effective permutation to the TSP as the solution after examining every option.

Give back the best answer possible: Return the most effective permutation to the TSP as the solution after all possible combinations have been examined.

Even though this implementation gives an exact solution for the TSP, it becomes costly to compute for larger instances due to its time complexity, which increases factorially with the number of cities.

The Branch-and-Bound Method

The Branch-and-Bound method can be used to solve the Traveling Salesman Problem (TSP) and other combinatorial optimization problems. To effectively find a suitable space and the best answer, divide-and-conquer strategies are combined with eliminating less-than-ideal solutions.

This is how the Branch and Bound method for the Traveling Salesman Problem works:

Start : First, give a simple answer. You could find this by starting with an empty tour or using a heuristic method.

Limit Calculation : Find the lowest total cost of the current partial solution. This limit shows the least amount of money needed to finish the tour.

Divide : Select a variable that is associated with the subsequent stage of the tour. This could mean picking out the next city to visit.

When making branches, think about the possible values for the variable you’ve chosen. Each branch stands for a different choice or option.

Cutting down : If a branch’s lower bound is higher than the best-known solution right now, cut it because you know that it can’t lead to the best solution.

Exploration : Keep using the branch-and-bound method to look into the other branches. Keep cutting and branching until all of your options have been thought through.

New Ideal Solution : Save the best solution you found while doing research. You should change the known one if a new, cheaper solution comes along.

Termination : Continue investigating until all possible paths have been considered and no more choices exist that could lead to a better solution. End the algorithm when all possible outcomes have been studied.

Selecting the order in which to visit the cities is one of the decision variables for the Traveling Salesman Problem. Usually, methods like the Held-Karp lower bound are used to calculate the lower bound. The technique identifies and cuts branches that are likely to result in less-than-ideal solutions as it carefully investigates various combinations of cities.

The Nearest Neighbor Method

A heuristic algorithm called the Nearest Neighbor method estimates solutions to the Traveling Salesman Problem (TSP). In contrast to exact methods like brute force or dynamic programming, which always get the best results, the Nearest Neighbor method finds a quick and reasonable solution by making local, greedy choices.

Here is a detailed explanation of how the nearest-neighbor method solves the TSP problem:

Starting Point : Pick a city randomly to be the tour’s starting point.

Picking Something Greedy: Select the next city on the tour to visit at each stage based on how close the current city is to the not-explored city. Usually, a selected measurement is used to calculate the distance between cities (e.g., Euclidean distance).

Go and Mark: Visit the closest city you picked, add it to your tour, and mark it as observed.

Additionally: Continue this manner until every city has been visited at least once.

Go Back to the Beginning City: After visiting all the other cities, return to the starting city to finish the tour.

The nearest-neighbor method’s foundation is making locally optimal choices at each stage and hoping that the sum of these choices will produce a reasonable overall solution. Compared to exact algorithms, this greedy approach drastically lowers the level of computation required, which makes it appropriate for relatively large cases of the TSP.

Ant Colony Optimization

ACO, or Ant Colony Optimization, is a metaheuristic algorithm that draws inspiration from ants’ seeking habits. It works very well for resolving a combination of optimization issues, such as the TSP (Traveling Salesman Problem). The idea behind ACO is to imitate ant colonies’ chemical trail communication to determine the best routes.

Ant Colony Optimization provides the following solution for the Traveling Salesman Problem:

Starting Point: A population of synthetic ants should be planted in a random starting city. Every ant is a possible solution for the TSP.

Initialization of The scents: Give each edge in the problem space (connections between cities) an initial amount of synthetic pheromone. The artificial ants communicate with one another via the fragrance.

Ant Motion: Each ant creates a tour by constantly selecting the next city to visit using a combination of fragrance levels and a heuristic function.

The quantity of fragrance on the edge that links the candidate city to the current city, as well as a heuristic measure that could be based on factors like distance between cities, impact the chances of selecting a specific city.

Ants mimic how real ants use chemical trails for communication by following paths with higher fragrance levels.

Update on Pheromones: The pheromone concentration on every edge is updated once every ant has finished traveling.

The update involves placing fragrances on the borders of the best tours and evaporating existing fragrances to copy the natural breakdown of chemical paths, which is intended to encourage the search for successful paths.

Repetition: For the fixed number of cycles or until a shift standard is satisfied, repeat the steps of ant movement, fragrance update, and tour construction.

Building Solution : After a few iterations, the artificial ants develop an answer, which is considered the algorithm’s outcome. This solution approximates the most efficient TSP tour.

Enhancement: To improve progress and solution quality, the process can be optimized by adjusting parameters like the influence of the heuristic function and the rate at which fragrances evaporate.

Ant Colony Optimization is excellent at solving TSP cases by using the ant population’s group ability. By striking a balance between exploration and exploitation, the algorithm can find potential paths and take advantage of success. It is a well-liked option in the heuristics field since it has been effectively used to solve various optimization issues.

Genetic Algorithm

Genetic Algorithms (GAs) are optimization algorithms derived from the concepts of genetics and natural selection. These algorithms imitate evolution to find predictions for complex problems, such as the Traveling Salesman Problem (TSP).

Here is how genetic algorithms resolve the TSP:

Starting Point: select a starting group of possible TSP solutions. Every possible tour that visits every city exactly once is represented by each solution.

Assessment: Examine each solution’s fitness within the population. Fitness is commonly defined in the TSP environment as the opposite of the total distance traveled. Tour length is a determining factor in fitness scores.

Choice: Choose people from the population to be the parents of the following generation. Each person’s fitness level determines the likelihood of selection. More fit solutions have a higher chance of being selected.

Transformation (Recombination): To produce offspring, perform crossover, or recombination, on pairs of chosen parents. To create new solutions, crossover entails sharing information between parent solutions.

Crossover can be applied in various ways for TSP, such as order crossover (OX) or partially mapped crossover (PMX), to guarantee that the resulting tours preserve the authenticity of city visits.

Change: Change some of the offspring solutions to introduce arbitrary changes. A mutation can involve flipping two cities or changing the order of a subset of cities.

Mutations add diversity to the population when discovering new regions of the solution space.

Substitute: Parents and children together make up the new generation of solutions that will replace the old ones. A portion of the top-performing solutions from the previous generation may be retained in the new generation as part of a privileged replacement process.

Finalization: For a predetermined number of generations or until a convergence criterion is satisfied, repeat the selection, crossover, mutation, and replacement processes.

Enhancement: Modify variables like population size, crossover rate, and mutation rate to maximize the algorithm’s capacity to identify excellent TSP solutions.

When it comes to optimizing combinations, genetic algorithms are exceptional at sifting through big solution spaces and identifying superior answers. Because of their capacity to duplicate natural evolution, they can adjust to the TSP’s structure and find almost ideal tours. GAs are an effective tool in the field of evolutionary computation because they have been successfully applied to a wide range of optimization problems.

NextBillion.ai offers advanced Route Optimization API that solves real-life TSP and VRP problems, which can be easily integrated with your applications.

Book a demo Today!

In this Article

About author.

Rishabh is a freelance technical writer based in India. He is a technology enthusiast who loves working in the B2B tech space.

It is not possible to solve the Traveling Salesman Problem with Dijkstra’s algorithm. Dijkstra’s algorithm, a single-source shortest path algorithm, finds the shortest possible route from a given source node to every other node in a weighted graph. In comparison, the Traveling Salesman Problem looks for the quickest route that stops in every city exactly once before returning to the starting point.

The term “traveling salesman” refers to a scenario where a salesperson visits different cities to sell goods or services. The goal of this problem is to find the shortest route that goes to each city once and returns to the starting point. It is a fundamental problem in algorithmic optimization to determine the best order of city trips and minimize travel time or distance.

“Route salesman” or just “sales representative” are other terms for a traveling salesman. These people go to various places to sell goods and services to customers. The traveling salesman, who determines the shortest route to visit multiple cities, is often referred to as a “touring agent” or simply as the “salesman” in the context of mathematical optimization.

The minimum distance a salesman needs to visit each city exactly once and then return to the starting city is known as the shortest distance in the Traveling Salesman Problem (TSP). It stands for the ideal tour duration that reduces the total travel distance. The goal of solving the TSP, an essential issue in combinatorial optimization, is finding the shortest distance.

- Speakers & Mentors

- AI services

AI algorithms for travelling salesman problem

Revolutionize travel planning with ai algorithms for the traveling salesman problem. discover faster, smarter routes today.

Artificial Intelligence (AI) is a field that enables machines to mimic human intelligence. It has had a transformative impact on various sectors and domains, such as predicting weather patterns, personalizing customer experiences, and driving vehicles autonomously.

One of the problems that AI attempts to solve is the Travelling Salesman Problem (TSP), which is notable for its simplicity and complexity. The Traveling Salesman Problem (TSP) involves a salesman who must visit each city once, and return to the origin city, while minimizing the total distance traveled. Despite its straightforward premise, finding an efficient solution to TSP is a challenging task.

Understanding the Travelling Salesman Problem (TSP)

The Traveling Salesman Problem (TSP) is an optimization problem in graph theory. In this problem, the vertices represent the cities, the edges denote the paths between the cities, and the weight on the edges symbolizes the distance or cost. It involves finding the shortest possible route that visits each city once and returns to the starting point.

Although the problem may seem easy to comprehend, it belongs to the class of NP-Hard problems in computer science. This means that as the number of cities (n) increases, the complexity of solving the problem grows exponentially. Therefore, TSP is not only a fundamental problem in combinatorial optimization but also a benchmark for many optimization techniques.

Common Techniques to Solve TSP

Three common approaches to solving the TSP include Brute Force, Greedy Algorithms, and Dynamic Programming.

- Brute Force: This approach generates all possible routes and then selects the shortest one. While it guarantees a correct solution, the computational cost grows factorially with the number of cities, making it impractical for large instances of TSP.

- Greedy Algorithm: This heuristic approach starts at a random city, selects the closest unvisited city as the next destination, and repeats the process until all cities have been visited. While it’s fast, it often fails to find the optimal solution as it can get trapped in local minima.

- Dynamic Programming: This approach breaks down the problem into smaller subproblems, solves each subproblem only once, and stores their solutions. Although it finds the optimal solution, it has a high time and space complexity, making it inefficient for larger TSP instances.

Limitations of Conventional Techniques

Despite their utility, these traditional techniques have their shortcomings. The Brute Force and Dynamic Programming methods, while exact, have exponential time complexity and are infeasible for large-scale problems. On the other hand, the Greedy Algorithm, although efficient, can lead to suboptimal solutions.

These challenges point to a need for more sophisticated and efficient methods of solving the TSP—methods that leverage AI techniques.

Role of AI in Solving TSP

The use of AI and its subfield of Machine Learning (ML) has led to a significant change in solving complex optimization problems such as TSP. AI methods are appealing for TSP because they can learn from past experiences and refine their strategies, resulting in increasingly efficient solutions over time.

Unlike traditional approaches, AI algorithms can operate efficiently even as the number of cities increases. They provide a solution that is more adaptable, resilient, and scalable. They often find near-optimal solutions for large-scale instances of the TSP that are beyond the reach of exact methods.

Types of AI Algorithms Used to Solve TSP

AI brings a variety of algorithms to the table for addressing TSP. Notable among these are Genetic Algorithms, Ant Colony Optimization, and Simulated Annealing.

- Genetic Algorithm: Inspired by the process of natural selection, this algorithm operates on a population of potential solutions and uses mechanisms such as crossover, mutation, and selection to evolve the population toward better solutions.

- Ant Colony Optimization: This method simulates the behavior of ants seeking a path from their colony to food. The collective intelligence of the ants is harnessed to find the most efficient path.

- Simulated Annealing: This probabilistic technique is inspired by the annealing process in metallurgy. It provides a way to escape local minima by allowing less optimal solutions in early stages, thus facilitating exploration of the solution space.

Deep Dive into Genetic Algorithm

The Genetic Algorithm is a widely used and efficient AI algorithm for solving TSP. It starts with a population of randomly generated routes (chromosomes) that are evaluated based on their total distance (fitness).

The algorithm selects pairs of routes to reproduce based on their fitness, resulting in offspring that often inherit characteristics (genes) from both parents. This is accomplished through operations such as crossover, which involves exchanging genes between parents to create new offspring, and mutation, which introduces random changes to the offspring’s genes to maintain diversity in the population.

The algorithm refines the population through successive generations, ultimately converging on the most efficient route.

Deep Dive into Ant Colony Optimization

Ant Colony Optimization (ACO) is an influential AI algorithm used to tackle TSP. The algorithm is inspired by the foraging behavior of real ants and capitalizes on the concept of pheromone deposition and evaporation to guide the search for optimal solutions.

As ants move in search of food, they leave a pheromone trail. Ants follow stronger pheromone trails, reinforcing the shortest paths with pheromones while longer paths see their pheromone levels decrease due to evaporation.

In the context of TSP, each ant represents a possible solution or route. Ants construct solutions by moving from city to city, depositing a quantity of pheromone inversely proportional to the length of their tour. The colony uses the pheromone information to construct more efficient routes over time.

Deep Dive into Simulated Annealing

Simulated Annealing (SA) is a probabilistic technique used to approximate the global optimum of a given function. It is inspired by the annealing process in material science, where a material is heated and then slowly cooled to reduce defects. SA applies a similar principle to find the optimal solution in a large search space.

In the Traveling Salesman Problem (TSP), the SA algorithm begins with a random solution or tour. It then generates a new tour by making a small change to the current solution. If the new tour is shorter, it is accepted as the current solution. If the new tour is longer, it may still be accepted based on a probability determined by the ‘temperature’ parameter. The temperature is initially set high to allow the algorithm to accept suboptimal solutions, which facilitates exploration of the solution space. As the process continues, the temperature gradually decreases, reducing the chances of accepting worse solutions and allowing the algorithm to exploit the best solutions found. This approach helps simulated annealing avoid getting trapped in local minima, thereby increasing the likelihood of finding a near-optimal solution.

Comparative Analysis of AI Algorithms for TSP

The AI algorithms discussed in this text, namely Genetic Algorithm, Ant Colony Optimization, and Simulated Annealing, each have their own strengths and limitations.

Genetic Algorithm is particularly effective in maintaining a diverse set of solutions and exploiting the best parts of those solutions. However, it is sensitive to the choice of initial population and may require careful tuning of parameters such as crossover and mutation rates.

Ant Colony Optimization (ACO) is a robust and adaptive method that leverages the collective intelligence of a swarm. The continuous updating of pheromone trails facilitates the identification of good solutions. However, ACO may sometimes get stuck in a locally optimal solution and may also require careful parameter tuning.

On the other hand, Simulated Annealing (SA) excels in avoiding local minima due to its probabilistic nature. The algorithm provides a balance between exploration (searching new areas) and exploitation (searching around the best solution found so far). However, its performance is highly dependent on the cooling schedule and the choice of initial temperature.

The choice between these algorithms largely depends on the specifics of the problem at hand and the computational resources available.

Practical Implementations of AI in TSP

AI algorithms have proven to be remarkably successful in solving real-world instances of TSP. In logistics and supply chain management, these algorithms help in route optimization, reducing fuel consumption, and enhancing timely deliveries. In telecommunications, they aid in designing optimal paths for data routing to minimize latency.

Moreover, various online TSP solvers, powered by AI algorithms, are available to solve TSP instances interactively. These platforms enable users to input their custom TSP problems and provide near-optimal solutions quickly.

Future Trends in AI for TSP

As artificial intelligence (AI) continues to evolve, we can expect the development of more advanced and efficient algorithms for solving the Traveling Salesman Problem (TSP). Reinforcement learning, a type of machine learning in which an agent learns to make decisions by interacting with its environment, shows promise in solving TSP.

Additionally, hybrid models that combine the strengths of multiple AI algorithms could potentially offer more robust and versatile solutions. For example, combining Genetic Algorithm and Ant Colony Optimization can utilize the exploratory capabilities of genetic operations and the exploitative capabilities of pheromones.

Another promising area is deep learning, a subset of machine learning that models high-level abstractions in data through the use of multiple processing layers, which has potential for TSP. Preliminary research indicates that deep neural networks can approximate the solution of TSP instances. This is an exciting development in the field.

Challenges and Opportunities

Despite the promising advances, several challenges remain. Designing AI algorithms that can consistently and rapidly provide optimal solutions to large-scale TSP instances is a major challenge. Furthermore, tuning AI algorithms for specific problem instances and ensuring their robustness in the face of dynamic or uncertain problem parameters are crucial issues to address.

However, these challenges present opportunities for further research and innovation. The quest for developing more efficient and sophisticated AI algorithms for TSP is likely to yield valuable insights and techniques that can be applied to other complex optimization problems.

The Travelling Salesman Problem (TSP) represents a significant challenge in optimization, despite its seemingly simple premise. The advent of AI and its algorithms has revolutionized the way we approach TSP, providing efficient and scalable solutions. As AI continues to evolve, we can anticipate even more sophisticated and efficient techniques for solving TSP and other complex problems.

1. What makes the Travelling Salesman Problem (TSP) so challenging to solve?

Despite its simple premise, the TSP falls into a class of problems known as NP-Hard in computer science. As the number of cities increases, the complexity of finding the optimal solution grows exponentially, making it a challenging task.

2. How do AI algorithms address the TSP differently from conventional methods?

Unlike conventional methods, which can be computationally intensive or lead to suboptimal solutions, AI algorithms can learn from past experiences and refine their strategies over time. They provide a more adaptable, resilient, and scalable solution, often finding near-optimal solutions even for large-scale instances of the TSP.

3. What are some real-world applications of AI algorithms solving TSP?

AI algorithms are instrumental in solving real-world TSP instances in logistics for route optimization, reducing fuel consumption, and timely deliveries. In the telecommunications sector, they aid in designing optimal paths for data routing to minimize latency.

4. What is the future of AI in solving TSP?

The future of AI in solving TSP is promising. Reinforcement learning, hybrid models, and deep learning are among the emerging trends in AI that could potentially offer more efficient solutions to TSP.

5. What are the challenges in using AI for TSP?

While AI brings several advantages, challenges remain. Designing AI algorithms that consistently provide optimal solutions to large-scale TSP instances, tuning AI algorithms for specific problem instances, and ensuring their robustness in dynamic or uncertain situations are crucial issues that need addressing.

Related posts:

About the author

Walmart Revolutionizes Grocery Delivery with Cutting-Edge AI Technology

Timefold – the powerful planning optimization solver, yandex routing: the ultimate guide to route optimization, solving notorious traveling salesman with cutting-edge ai algorithms.

- Data Structures

- Linked List

- Binary Tree

- Binary Search Tree

- Segment Tree

- Disjoint Set Union

- Fenwick Tree

- Red-Black Tree

- Advanced Data Structures

- Dynamic Programming or DP

- What is memoization? A Complete tutorial

- Dynamic Programming (DP) Tutorial with Problems

- Tabulation vs Memoization

- Optimal Substructure Property in Dynamic Programming | DP-2

- Overlapping Subproblems Property in Dynamic Programming | DP-1

- Steps for how to solve a Dynamic Programming Problem

Advanced Topics

- Bitmasking and Dynamic Programming | Set 1 (Count ways to assign unique cap to every person)

- Digit DP | Introduction

- Sum over Subsets | Dynamic Programming

Easy problems in Dynamic programming

- Count all combinations of coins to make a given value sum (Coin Change II)

- Subset Sum Problem

- Introduction and Dynamic Programming solution to compute nCr%p

- Cutting a Rod | DP-13

- Painting Fence Algorithm

- Longest Common Subsequence (LCS)

- Longest Increasing Subsequence (LIS)

- Longest subsequence such that difference between adjacents is one

- Maximum size square sub-matrix with all 1s

- Min Cost Path | DP-6

- Longest Common Substring (Space optimized DP solution)

- Count ways to reach the nth stair using step 1, 2 or 3

- Count Unique Paths in matrix

- Unique paths in a Grid with Obstacles

Medium problems on Dynamic programming

- 0/1 Knapsack Problem

- Printing Items in 0/1 Knapsack

- Unbounded Knapsack (Repetition of items allowed)

- Egg Dropping Puzzle | DP-11

- Word Break Problem | DP-32

- Vertex Cover Problem (Dynamic Programming Solution for Tree)

- Tile Stacking Problem

- Box Stacking Problem | DP-22

- Partition problem | DP-18

Travelling Salesman Problem using Dynamic Programming

- Longest Palindromic Subsequence (LPS)

- Longest Common Increasing Subsequence (LCS + LIS)

- Find all distinct subset (or subsequence) sums of an array

- Weighted Job Scheduling

- Count Derangements (Permutation such that no element appears in its original position)

- Minimum insertions to form a palindrome | DP-28

- Ways to arrange Balls such that adjacent balls are of different types

Hard problems on Dynamic programming

- Palindrome Partitioning

- Word Wrap Problem

- The Painter's Partition Problem

- Program for Bridge and Torch problem

- Matrix Chain Multiplication | DP-8

- Printing brackets in Matrix Chain Multiplication Problem

- Maximum sum rectangle in a 2D matrix | DP-27

- Maximum profit by buying and selling a share at most k times

- Minimum cost to sort strings using reversal operations of different costs

- Count of AP (Arithmetic Progression) Subsequences in an array

- Introduction to Dynamic Programming on Trees

- Maximum height of Tree when any Node can be considered as Root

- Longest repeating and non-overlapping substring

- Top 20 Dynamic Programming Interview Questions

Travelling Salesman Problem (TSP):

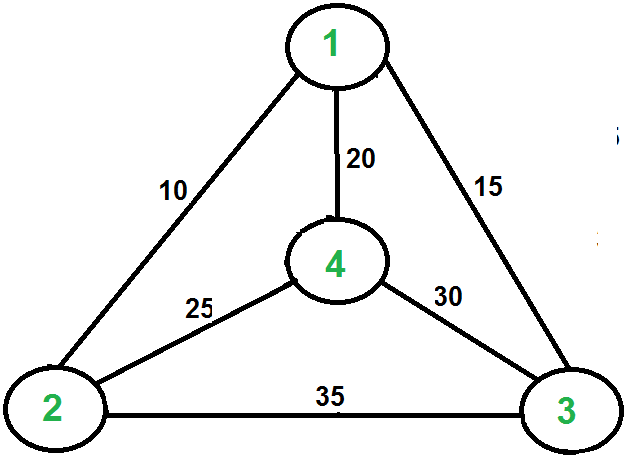

Given a set of cities and the distance between every pair of cities, the problem is to find the shortest possible route that visits every city exactly once and returns to the starting point. Note the difference between Hamiltonian Cycle and TSP. The Hamiltonian cycle problem is to find if there exists a tour that visits every city exactly once. Here we know that Hamiltonian Tour exists (because the graph is complete) and in fact, many such tours exist, the problem is to find a minimum weight Hamiltonian Cycle.

For example, consider the graph shown in the figure on the right side. A TSP tour in the graph is 1-2-4-3-1. The cost of the tour is 10+25+30+15 which is 80. The problem is a famous NP-hard problem. There is no polynomial-time know solution for this problem. The following are different solutions for the traveling salesman problem.

Naive Solution:

1) Consider city 1 as the starting and ending point.

2) Generate all (n-1)! Permutations of cities.

3) Calculate the cost of every permutation and keep track of the minimum cost permutation.

4) Return the permutation with minimum cost.

Time Complexity: ?(n!)

Dynamic Programming:

Let the given set of vertices be {1, 2, 3, 4,….n}. Let us consider 1 as starting and ending point of output. For every other vertex I (other than 1), we find the minimum cost path with 1 as the starting point, I as the ending point, and all vertices appearing exactly once. Let the cost of this path cost (i), and the cost of the corresponding Cycle would cost (i) + dist(i, 1) where dist(i, 1) is the distance from I to 1. Finally, we return the minimum of all [cost(i) + dist(i, 1)] values. This looks simple so far.

Now the question is how to get cost(i)? To calculate the cost(i) using Dynamic Programming, we need to have some recursive relation in terms of sub-problems.

Let us define a term C(S, i) be the cost of the minimum cost path visiting each vertex in set S exactly once, starting at 1 and ending at i . We start with all subsets of size 2 and calculate C(S, i) for all subsets where S is the subset, then we calculate C(S, i) for all subsets S of size 3 and so on. Note that 1 must be present in every subset.

Below is the dynamic programming solution for the problem using top down recursive+memoized approach:-

For maintaining the subsets we can use the bitmasks to represent the remaining nodes in our subset. Since bits are faster to operate and there are only few nodes in graph, bitmasks is better to use.

For example: –

10100 represents node 2 and node 4 are left in set to be processed

010010 represents node 1 and 4 are left in subset.

NOTE:- ignore the 0th bit since our graph is 1-based

Time Complexity : O(n 2 *2 n ) where O(n* 2 n) are maximum number of unique subproblems/states and O(n) for transition (through for loop as in code) in every states.

Auxiliary Space: O(n*2 n ), where n is number of Nodes/Cities here.

For a set of size n, we consider n-2 subsets each of size n-1 such that all subsets don’t have nth in them. Using the above recurrence relation, we can write a dynamic programming-based solution. There are at most O(n*2 n ) subproblems, and each one takes linear time to solve. The total running time is therefore O(n 2 *2 n ). The time complexity is much less than O(n!) but still exponential. The space required is also exponential. So this approach is also infeasible even for a slightly higher number of vertices. We will soon be discussing approximate algorithms for the traveling salesman problem.

Next Article: Traveling Salesman Problem | Set 2

References:

http://www.lsi.upc.edu/~mjserna/docencia/algofib/P07/dynprog.pdf

http://www.cs.berkeley.edu/~vazirani/algorithms/chap6.pdf

Please Login to comment...

Similar reads.

- Dynamic Programming

Improve your Coding Skills with Practice

What kind of Experience do you want to share?

- Interview Q

DAA Tutorial

Asymptotic analysis, analysis of sorting, divide and conquer, lower bound theory, sorting in linear time, binary search trees, red black tree, dynamic programming, greedy algorithm, backtracking, shortest path, all-pairs shortest paths, maximum flow, sorting networks, complexity theory, approximation algo, string matching.

Interview Questions

- Send your Feedback to [email protected]

Help Others, Please Share

Learn Latest Tutorials

Transact-SQL

Reinforcement Learning

R Programming

React Native

Python Design Patterns

Python Pillow

Python Turtle

Preparation

Verbal Ability

Company Questions

Trending Technologies

Artificial Intelligence

Cloud Computing

Data Science

Machine Learning

B.Tech / MCA

Data Structures

Operating System

Computer Network

Compiler Design

Computer Organization

Discrete Mathematics

Ethical Hacking

Computer Graphics

Software Engineering

Web Technology

Cyber Security

C Programming

Control System

Data Mining

Data Warehouse

Travelling Salesman Problem: Python, C++ Algorithm

What is the Travelling Salesman Problem (TSP)?

Travelling Salesman Problem (TSP) is a classic combinatorics problem of theoretical computer science. The problem asks to find the shortest path in a graph with the condition of visiting all the nodes only one time and returning to the origin city.

The problem statement gives a list of cities along with the distances between each city.

Objective: To start from the origin city, visit other cities only once, and return to the original city again. Our target is to find the shortest possible path to complete the round-trip route.

Example of TSP

Here a graph is given where 1, 2, 3, and 4 represent the cities, and the weight associated with every edge represents the distance between those cities.

The goal is to find the shortest possible path for the tour that starts from the origin city, traverses the graph while only visiting the other cities or nodes once, and returns to the origin city.

For the above graph, the optimal route is to follow the minimum cost path: 1-2-4-3-1. And this shortest route would cost 10+25+30+15 =80

Different Solutions to Travelling Salesman Problem

Travelling Salesman Problem (TSP) is classified as a NP-hard problem due to having no polynomial time algorithm. The complexity increases exponentially by increasing the number of cities.

There are multiple ways to solve the traveling salesman problem (tsp). Some popular solutions are:

The brute force approach is the naive method for solving traveling salesman problems. In this approach, we first calculate all possible paths and then compare them. The number of paths in a graph consisting of n cities is n! It is computationally very expensive to solve the traveling salesman problem in this brute force approach.

The branch-and-bound method: The problem is broken down into sub-problems in this approach. The solution of those individual sub-problems would provide an optimal solution.

This tutorial will demonstrate a dynamic programming approach, the recursive version of this branch-and-bound method, to solve the traveling salesman problem.

Dynamic programming is such a method for seeking optimal solutions by analyzing all possible routes. It is one of the exact solution methods that solve traveling salesman problems through relatively higher cost than the greedy methods that provide a near-optimal solution.

The computational complexity of this approach is O(N^2 * 2^N) which is discussed later in this article.

The nearest neighbor method is a heuristic-based greedy approach where we choose the nearest neighbor node. This approach is computationally less expensive than the dynamic approach. But it does not provide the guarantee of an optimal solution. This method is used for near-optimal solutions.

Algorithm for Traveling Salesman Problem

We will use the dynamic programming approach to solve the Travelling Salesman Problem (TSP).

Before starting the algorithm, let’s get acquainted with some terminologies:

- A graph G=(V, E), which is a set of vertices and edges.

- V is the set of vertices.

- E is the set of edges.

- Vertices are connected through edges.

- Dist(i,j) denotes the non-negative distance between two vertices, i and j.

Let’s assume S is the subset of cities and belongs to {1, 2, 3, …, n} where 1, 2, 3…n are the cities and i, j are two cities in that subset. Now cost(i, S, j) is defined in such a way as the length of the shortest path visiting node in S, which is exactly once having the starting and ending point as i and j respectively.

For example, cost (1, {2, 3, 4}, 1) denotes the length of the shortest path where:

- Starting city is 1

- Cities 2, 3, and 4 are visited only once

- The ending point is 1

The dynamic programming algorithm would be:

- Set cost(i, , i) = 0, which means we start and end at i, and the cost is 0.

- When |S| > 1, we define cost(i, S, 1) = ∝ where i !=1 . Because initially, we do not know the exact cost to reach city i to city 1 through other cities.

- Now, we need to start at 1 and complete the tour. We need to select the next city in such a way-

cost(i, S, j)=min cost (i, S−{i}, j)+dist(i,j) where i∈S and i≠j

For the given figure, the adjacency matrix would be the following:

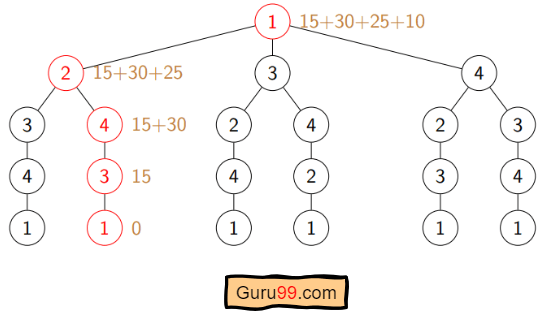

Let’s see how our algorithm works:

Step 1) We are considering our journey starting at city 1, visit other cities once and return to city 1.

Step 2) S is the subset of cities. According to our algorithm, for all |S| > 1, we will set the distance cost(i, S, 1) = ∝. Here cost(i, S, j) means we are starting at city i, visiting the cities of S once, and now we are at city j. We set this path cost as infinity because we do not know the distance yet. So the values will be the following:

Cost (2, {3, 4}, 1) = ∝ ; the notation denotes we are starting at city 2, going through cities 3, 4, and reaching 1. And the path cost is infinity. Similarly-

cost(3, {2, 4}, 1) = ∝

cost(4, {2, 3}, 1) = ∝

Step 3) Now, for all subsets of S, we need to find the following:

cost(i, S, j)=min cost (i, S−{i}, j)+dist(i,j), where j∈S and i≠j

That means the minimum cost path for starting at i, going through the subset of cities once, and returning to city j. Considering that the journey starts at city 1, the optimal path cost would be= cost(1, {other cities}, 1).

Let’s find out how we could achieve that:

Now S = {1, 2, 3, 4}. There are four elements. Hence the number of subsets will be 2^4 or 16. Those subsets are-

1) |S| = Null:

2) |S| = 1:

{{1}, {2}, {3}, {4}}

3) |S| = 2:

{{1, 2}, {1, 3}, {1, 4}, {2, 3}, {2, 4}, {3, 4}}

4) |S| = 3:

{{1, 2, 3}, {1, 2, 4}, {2, 3, 4}, {1, 3, 4}}

5) |S| = 4:

{{1, 2, 3, 4}}

As we are starting at 1, we could discard the subsets containing city 1.

The algorithm calculation:

1) |S| = Φ:

cost (2, Φ, 1) = dist(2, 1) = 10

cost (3, Φ, 1) = dist(3, 1) = 15

cost (4, Φ, 1) = dist(4, 1) = 20

cost (2, {3}, 1) = dist(2, 3) + cost (3, Φ, 1) = 35+15 = 50

cost (2, {4}, 1) = dist(2, 4) + cost (4, Φ, 1) = 25+20 = 45

cost (3, {2}, 1) = dist(3, 2) + cost (2, Φ, 1) = 35+10 = 45

cost (3, {4}, 1) = dist(3, 4) + cost (4, Φ, 1) = 30+20 = 50

cost (4, {2}, 1) = dist(4, 2) + cost (2, Φ, 1) = 25+10 = 35

cost (4, {3}, 1) = dist(4, 3) + cost (3, Φ, 1) = 30+15 = 45

cost (2, {3, 4}, 1) = min [ dist[2,3]+Cost(3,{4},1) = 35+50 = 85,

dist[2,4]+Cost(4,{3},1) = 25+45 = 70 ] = 70

cost (3, {2, 4}, 1) = min [ dist[3,2]+Cost(2,{4},1) = 35+45 = 80,

dist[3,4]+Cost(4,{2},1) = 30+35 = 65 ] = 65

cost (4, {2, 3}, 1) = min [ dist[4,2]+Cost(2,{3},1) = 25+50 = 75

dist[4,3]+Cost(3,{2},1) = 30+45 = 75 ] = 75

cost (1, {2, 3, 4}, 1) = min [ dist[1,2]+Cost(2,{3,4},1) = 10+70 = 80

dist[1,3]+Cost(3,{2,4},1) = 15+65 = 80

dist[1,4]+Cost(4,{2,3},1) = 20+75 = 95 ] = 80

So the optimal solution would be 1-2-4-3-1

Pseudo-code

Implementation in c/c++.

Here’s the implementation in C++ :

Implementation in Python

Academic solutions to tsp.

Computer scientists have spent years searching for an improved polynomial time algorithm for the Travelling Salesman Problem. Until now, the problem is still NP-hard.

Though some of the following solutions were published in recent years that have reduced the complexity to a certain degree:

- The classical symmetric TSP is solved by the Zero Suffix Method.

- The Biogeography‐based Optimization Algorithm is based on the migration strategy to solve the optimization problems that can be planned as TSP.

- Multi-Objective Evolutionary Algorithm is designed for solving multiple TSP based on NSGA-II.

- The Multi-Agent System solves the TSP of N cities with fixed resources.

Application of Traveling Salesman Problem

Travelling Salesman Problem (TSP) is applied in the real world in both its purest and modified forms. Some of those are:

- Planning, logistics, and manufacturing microchips : Chip insertion problems naturally arise in the microchip industry. Those problems can be planned as traveling salesman problems.

- DNA sequencing : Slight modification of the traveling salesman problem can be used in DNA sequencing. Here, the cities represent the DNA fragments, and the distance represents the similarity measure between two DNA fragments.

- Astronomy : The Travelling Salesman Problem is applied by astronomers to minimize the time spent observing various sources.

- Optimal control problem : Travelling Salesman Problem formulation can be applied in optimal control problems. There might be several other constraints added.

Complexity Analysis of TSP

So the total time complexity for an optimal solution would be the Number of nodes * Number of subproblems * time to solve each sub-problem. The time complexity can be defined as O(N 2 * 2^N).

- Space Complexity: The dynamic programming approach uses memory to store C(S, i), where S is a subset of the vertices set. There is a total of 2 N subsets for each node. So, the space complexity is O(2^N).

Next, you’ll learn about Sieve of Eratosthenes Algorithm

- Linear Search: Python, C++ Example

- DAA Tutorial PDF: Design and Analysis of Algorithms

- Heap Sort Algorithm (With Code in Python and C++)

- Kadence’s Algorithm: Largest Sum Contiguous Subarray

- Radix Sort Algorithm in Data Structure

- Doubly Linked List: C++, Python (Code Example)

- Singly Linked List in Data Structures

- Adjacency List and Matrix Representation of Graph

Help | Advanced Search

Computer Science > Artificial Intelligence

Title: distilling privileged information for dubins traveling salesman problems with neighborhoods.

Abstract: This paper presents a novel learning approach for Dubins Traveling Salesman Problems(DTSP) with Neighborhood (DTSPN) to quickly produce a tour of a non-holonomic vehicle passing through neighborhoods of given task points. The method involves two learning phases: initially, a model-free reinforcement learning approach leverages privileged information to distill knowledge from expert trajectories generated by the LinKernighan heuristic (LKH) algorithm. Subsequently, a supervised learning phase trains an adaptation network to solve problems independently of privileged information. Before the first learning phase, a parameter initialization technique using the demonstration data was also devised to enhance training efficiency. The proposed learning method produces a solution about 50 times faster than LKH and substantially outperforms other imitation learning and RL with demonstration schemes, most of which fail to sense all the task points.

Submission history

Access paper:.

- HTML (experimental)

- Other Formats

References & Citations

- Google Scholar

- Semantic Scholar

BibTeX formatted citation

Bibliographic and Citation Tools

Code, data and media associated with this article, recommenders and search tools.

- Institution

arXivLabs: experimental projects with community collaborators

arXivLabs is a framework that allows collaborators to develop and share new arXiv features directly on our website.

Both individuals and organizations that work with arXivLabs have embraced and accepted our values of openness, community, excellence, and user data privacy. arXiv is committed to these values and only works with partners that adhere to them.

Have an idea for a project that will add value for arXiv's community? Learn more about arXivLabs .

A longevity researcher is facing backlash for claiming to 'reverse aging.' Scientists say there's no consensus on what it means.

- Harvard researcher Dr. David Sinclair is facing backlash in the longevity community.

- Critics argue that he took claims about "reverse aging" too far, The Wall Street Journal reported .

- Longevity medicine is attracting billions in investment from the world's wealthiest execs.

Harvard researcher Dr. David Sinclair has found himself at the center of controversy within the longevity community.

Sinclair has been a poster child of the longevity movement for years. He's built several biotechnology companies focused on reversing the effects of aging, won acclaim for his research , and cultivated a loyal base of fans who swear by his lifestyle tips .

He's also earned his share of critics who say his research isn't always backed up by sufficient evidence. But over the past months, The Wall Street Journal reported that Sinclair has been battling a new level of backlash from colleagues and researchers who say his claims on curing aging have gone too far.

A matter of semantics?

The controversy began on February 29, when Sinclair's dog-supplement company, Animal Biosciences, issued a press release.

"I am very proud of the teams at NCSU and Animal Biosciences, who, after years of collaborative research and a clinical trial, have developed the first supplement proven to reverse aging in dogs," Sinclair said in the release, according to Newsweek .

Sinclair contends that he was misquoted. "The actual quote that I had approved was 'proven to reverse the effects of aging in dogs,'" he told the Journal, adding, "I felt this was a reasonable statement."

Related stories

Scientists rushed to contest the claim. "The data is not good, you're calling it the wrong thing, and then you're selling it," Dr. Nir Barzilai told the Journal. "The selling is a step too far." Dozens of scientists resigned from The Academy for Health and Lifespan Research — a nonprofit organization of longevity researchers that Sinclair cofounded and headed as president.

Dr. Matt Kaeberlein — a biologist who was among the throng of resignees — described Sinclair on X as the definition of a "snake oil salesman."

After careful consideration, I have renounced my membership in the Academy for Health and Lifespan Research ( @ahlresearch ). I find it deeply distressing that we’ve gotten to a point where dishonesty in science is normalized to an extent that nobody is shocked when a tenured… — Matt Kaeberlein (@mkaeberlein) March 3, 2024

Sinclair resigned from the Academy in March, the Journal reported based on an email circulated by the Academy. Barzilai has since taken over as president.

Dr. Sinclair did not respond to a request for comment from Business Insider.

Animal Biosciences reissued a press release walking back the "reverse aging" claim. But scientists in the field say the issue is even more fundamental: There's no way to reverse aging, much less measure it.

The concept of biological age — the true age that our cells, tissues, and organ systems appear to be, based on biochemistry, according to the National Institute on Aging — is gaining traction in longevity circles. Yet, it's still a fuzzy and controversial concept because there's no standard for normal aging . The way we age varies a lot from person to person.

Experts working to standardize longevity medicine say it could take years before it'll be recognized as an official field like cardiology or neurology, according to the MIT Technology Review . "This is a new field," Andrea Maier of the National University of Singapore and cofounder of the private "high-end" Chi Longevity clinic told MIT Technology Review. "We have to organize ourselves; we have to set standards."

Still, billions of dollars are being funneled into research. Longevity startups drew a global investment of more than $5.2 billion in 2022, according to PitchBook. And those backed by the world's wealthiest executives like Jeff Bezos and Peter Thiel are dedicated to studying cellular aging — and its cures. That means debates about the semantics of aging will only become more relevant to our daily lives.

- Main content

COMMENTS

However, working with problem-solving in the artificial intelligence (AI) field, it is difficult to specify a formulation of a problem from the beginning. Therefore, the flexibility of choosing a solution procedure during the observation of state changes is highly required. ... In this post, I will introduce Traveling Salesman Problem (TSP) as ...

Travelling Salesman Problem (TSP) : Given a set of cities and distances between every pair of cities, the problem is to find the shortest possible route that visits every city exactly once and returns to the starting point. Note the difference between Hamiltonian Cycle and TSP. The Hamiltonian cycle problem is to find if there exists a tour that visits every city exactly once.

The generalized travelling salesman problem, also known as the "travelling politician problem", deals with "states" that have (one or more) "cities", and the salesman must visit exactly one city from each state. One application is encountered in ordering a solution to the cutting stock problem in order to minimize knife changes.

The Traveling Salesman Problem (TSP) has been solved for many years and used for tons of real-life situations including optimizing deliveries or network routing. This article will show a simple framework to apply Q-Learning to solving the TSP, and discuss the pros & cons with other optimization techniques.

A new AI processor has extended the traveling salesman solution from 16 nodes to 22.; The traveling salesman is an age-old exercise in optimization, studied in school and relevant to "real life." ...

The Travelling Salesman Problem (TSP) is a well-known problem in the field of Artificial Intelligence (AI) and Machine Learning. It involves finding the shortest possible route for a salesman to visit a number of cities and return to the starting point.

The origin of the traveling salesman problem is not very clear; it is mentioned in an 1832 manual for traveling salesman, which included example tours of 45 German cities but was not formulated as a mathematical problem. However, in the 1800s, mathematicians William Rowan Hamilton and Thomas Kirkman devised mathematical formulations of the problem.

The traveling salesman problem (TSP) is arguably one of the most iconic and challenging combinatorial optimization problems in computer science and operations research. At its core, this notorious NP-hard problem deals with finding the shortest route visiting each city in a given list exactly once, before returning to the starting city.

This scenario resembles the famous traveling salesman problem that has been a thorn in the side of computer scientists for decades. A TSP tour that visits a city in all 49 contiguous U.S. states.

The Traveling Salesperson Problem (TSP) is one of the most popular NP-hard combinatorial problems in the theoretical computer science and operations research (OR) community. It asks the following question: "Given a list of cities and the distances between each pair of cities, what is the shortest possible route that visits each city exactly once and […]

ACO, or Ant Colony Optimization, is a metaheuristic algorithm that draws inspiration from ants' seeking habits. It works very well for resolving a combination of optimization issues, such as the TSP (Traveling Salesman Problem). The idea behind ACO is to imitate ant colonies' chemical trail communication to determine the best routes.

Artificial Intelligence (AI) is a field that enables machines to mimic human intelligence. It has had a transformative impact on various sectors and domains, such as predicting weather patterns, personalizing customer experiences, and driving vehicles autonomously. One of the problems that AI attempts to solve is the Travelling Salesman Problem ...

The traveling salesperson problem is one of a handful of foundational problems that theoretical computer scientists turn to again and again to test the limits of efficient computation. The new result "is the first step towards showing that the frontiers of efficient computation are in fact better than what we thought," Williamson said.

The traveling salesman problem (TSP) involves finding the shortest path that visits n specified locations, starting and ending at the same place and visiting the other n-1 destinations exactly ...